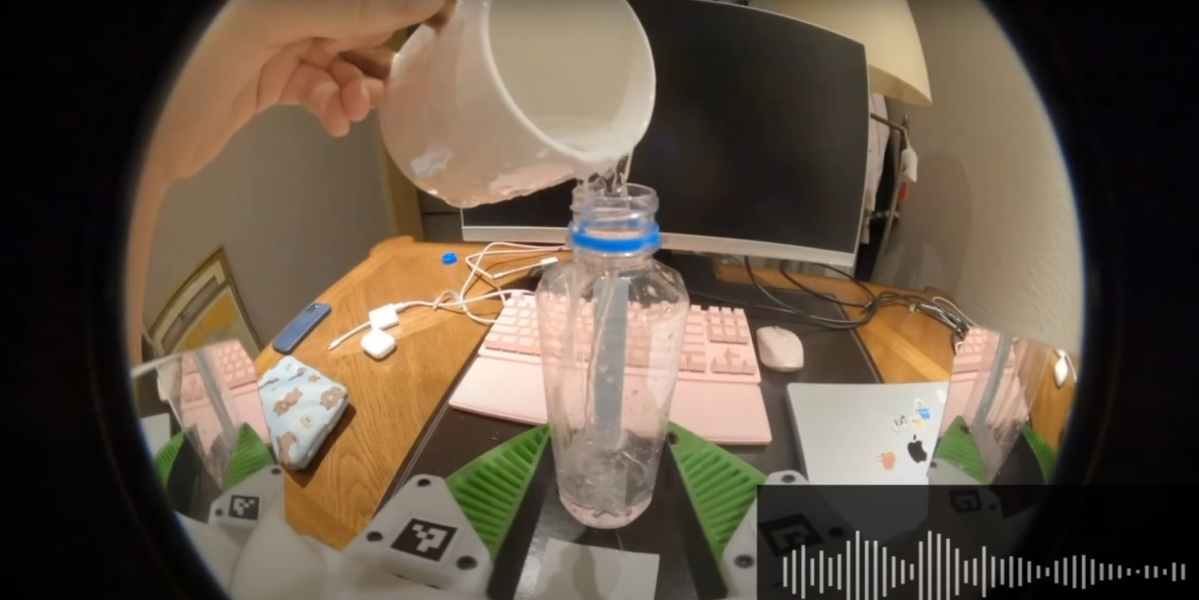

Researchers at the Robotics and Embodied AI Lab at Stanford University set out to change that. They first built a system for collecting audio data, consisting of a GoPro camera and a gripper with a microphone designed to filter out background noise. Human demonstrators used the gripper for a variety of household tasks, and the researchers then used this data to teach robotic arms how to perform the tasks on their own. The team’s new training algorithms help robots gather clues from audio signals to perform more effectively.

“Thus far, robots have been training on videos that are muted,” says Zeyi Liu, a PhD student at Stanford and lead author of the study. “But there is so much helpful data in audio.”

To test how much more successful a robot can be if it is capable of “listening,” the researchers chose four tasks: flipping a bagel in a pan, erasing a whiteboard, sticking two Velcro strips together, and pouring dice out of a cup. In each task, sounds provide clues that cameras or tactile sensors struggle with, like whether the eraser is properly contacting the whiteboard or whether the cup contains dice.

After demonstrating each task a few hundred times, the team compared the success rates of training with audio and training with vision alone. The results, published in a paper on arXiv that has not been peer-reviewed, were promising. Using vision alone in the dice test, the robot could tell whether there were dice in the cup 27% of the time, but that rose to 94% when sound was included.

It isn’t the first time audio has been used to train robots, says Shuran Song, the head of the lab that produced the study, but it’s a big step toward doing so at scale: “We are making it easier to use audio collected ‘in the wild,’ rather than being restricted to collecting it in the lab, which is more time-consuming.”

The research signals that audio may become a more sought-after data source in the race to train robots with AI. Researchers are teaching robots faster than ever before using imitation learning, showing them hundreds of examples of tasks being performed instead of hand-coding each one. If audio could be collected at scale using devices like the one in the study, it could give robots an entirely new “sense,” helping them adapt more quickly to environments where visibility is limited or not useful.
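To make the idea of imitation learning concrete, here is a deliberately tiny sketch of one simple form of it: nearest-neighbor behavior cloning, where the robot copies the action recorded in whichever demonstration looks most like its current situation. This is not the model the Stanford team trained. The two-number feature vector (a "visual" value and an "audio" value), the action labels, and the class names are all hypothetical, chosen only to show how an extra audio feature can separate situations that look the same to a camera.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    """One recorded (observation, action) pair from a human demonstration."""
    observation: tuple[float, ...]  # hypothetical features, e.g. (visual, audio)
    action: str                     # hypothetical discrete action label


class NearestNeighborPolicy:
    """Behavior cloning by lookup: copy the action of the closest demonstration."""

    def __init__(self, demos: list[Demo]) -> None:
        if not demos:
            raise ValueError("need at least one demonstration")
        self.demos = list(demos)

    def act(self, observation: tuple[float, ...]) -> str:
        # Euclidean distance in feature space; ties go to the earliest demo.
        best = min(self.demos, key=lambda d: math.dist(d.observation, observation))
        return best.action


# Two demos that look identical to the camera (visual feature 0.5)
# but differ in how loudly dice rattle in the cup (audio feature).
demos = [
    Demo(observation=(0.5, 0.9), action="keep_pouring"),
    Demo(observation=(0.5, 0.05), action="stop"),
]
policy = NearestNeighborPolicy(demos)
print(policy.act((0.5, 0.8)))  # keep_pouring
print(policy.act((0.5, 0.1)))  # stop
```

If the audio value is dropped, the two demonstrations collapse onto the same point and the lookup can no longer tell "dice still in the cup" from "cup empty"; it simply returns the first match. Real systems learn far richer policies from raw images and sound, but the toy case shows why a second sense can resolve ambiguities that vision alone cannot.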

“It’s safe to say that audio is the most understudied modality for sensing [in robots],” says Dmitry Berenson, associate professor of robotics at the University of Michigan, who was not involved in the study. That’s because the bulk of research on training robots to manipulate objects has focused on industrial pick-and-place tasks, like sorting objects into bins. Those tasks don’t benefit much from sound, relying instead on tactile or visual sensors. But as robots expand into tasks in homes, kitchens, and other environments, audio will become increasingly useful, Berenson says.

Consider a robot trying to find which bag or pocket contains a set of keys, all with limited visibility. “Maybe even before you touch the keys, you hear them kind of jangling,” Berenson says. “That’s a cue that the keys are in that pocket instead of others.”

Still, audio has limits. The team points out that sound won’t be as useful with so-called soft or flexible objects like clothes, which don’t produce as much usable audio. The robots also struggled to filter out the sound of their own motors during tasks, since that noise was not present in the training data generated by humans. To fix this, the researchers had to add robot sounds (whirs, hums, and actuator noises) to the training sets so the robots could learn to tune them out.
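Adding robot noise to human-recorded training audio is a form of data augmentation. The sketch below shows one common way to do it, mixing a noise clip into each demonstration at a chosen signal-to-noise ratio (SNR). It is an illustration under stated assumptions, not the Stanford team’s code: it assumes mono audio stored as plain lists of float samples at a shared sample rate, a hypothetical bank of recorded robot-noise clips, and an SNR drawn uniformly from a made-up range of 5 to 20 dB. The function names are hypothetical.

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a non-empty sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_robot_noise(demo_audio, robot_noise, snr_db, rng=random):
    """Overlay a random segment of robot_noise on demo_audio at snr_db.

    The noise is scaled so that 20 * log10(rms(demo) / rms(added_noise)) == snr_db.
    Both inputs are lists of floats at the same (assumed) sample rate.
    """
    if not demo_audio:
        return []
    if len(robot_noise) < len(demo_audio):
        raise ValueError("noise clip must be at least as long as the demo clip")
    # Pick a random window of the noise clip with the same length as the demo.
    start = rng.randint(0, len(robot_noise) - len(demo_audio))
    segment = robot_noise[start:start + len(demo_audio)]
    noise_rms = rms(segment)
    if noise_rms == 0:
        return list(demo_audio)  # silent noise clip: nothing to add
    target_noise_rms = rms(demo_audio) / (10 ** (snr_db / 20))
    scale = target_noise_rms / noise_rms
    return [s + scale * n for s, n in zip(demo_audio, segment)]


def augment_dataset(demos, noise_bank, snr_range=(5.0, 20.0), seed=0):
    """Return one noisy copy of every demo, each with a random clip and SNR."""
    rng = random.Random(seed)
    augmented = []
    for audio in demos:
        noise = rng.choice(noise_bank)
        snr = rng.uniform(*snr_range)
        augmented.append(mix_robot_noise(audio, noise, snr, rng))
    return augmented
```

Randomizing both the clip and the SNR means the model sees motor noise at many different loudness levels relative to the task sounds, which is what lets it learn to treat those whirs and hums as irrelevant. In practice the noisy copies would typically be added alongside the clean originals rather than replacing them.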

The next step, Liu says, is to see how much better the models can get with more data, which could mean adding more microphones, collecting spatial audio, and incorporating microphones into other types of data-collection devices.