FAMOUS doctors are being “deepfaked” on social media to dupe people into health scams or promotions, according to an investigation.

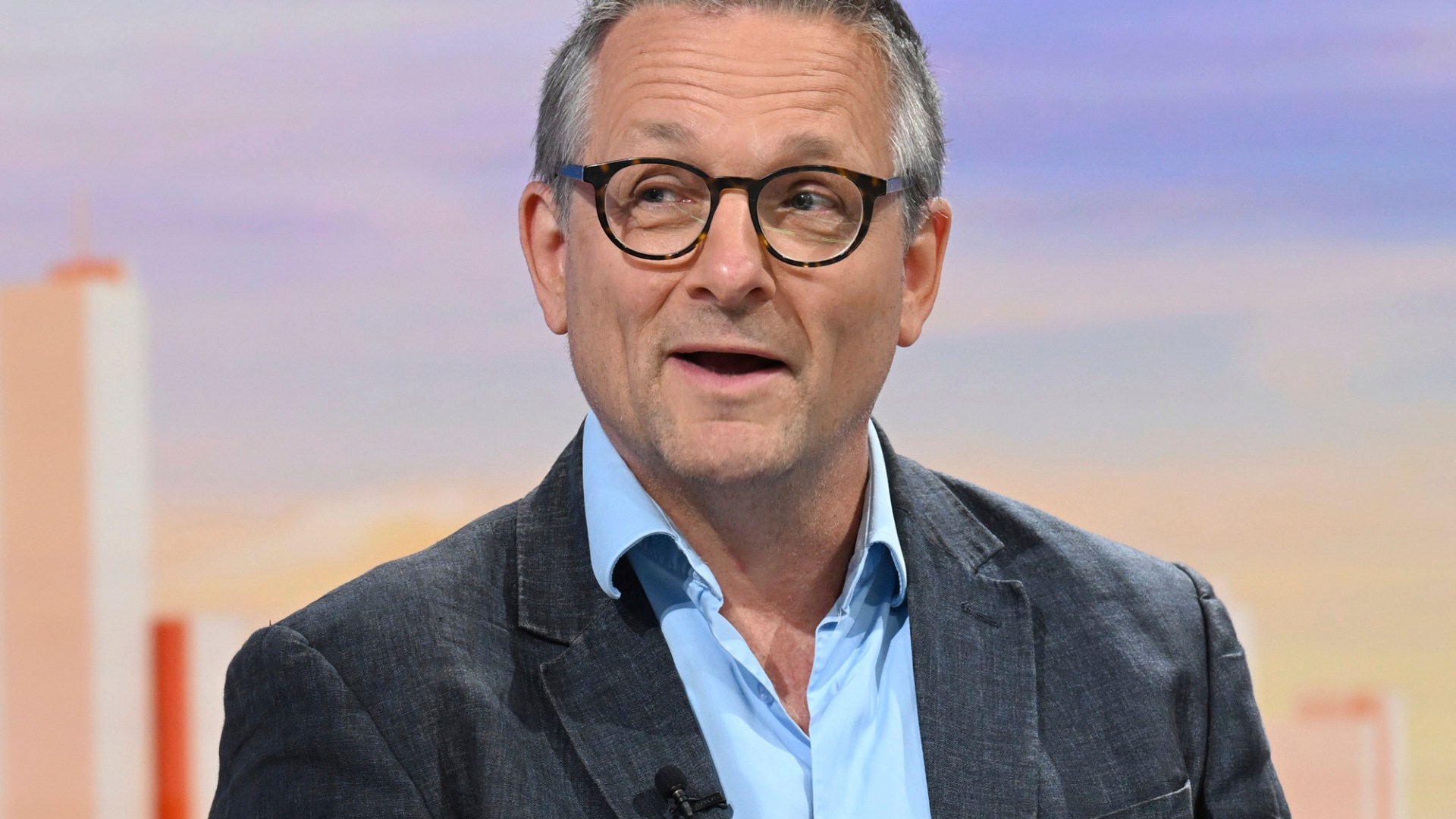

Online chancers are using artificial intelligence to make it look like top docs like the late Michael Mosley, Hilary Jones and Rangan Chatterjee are promoting their products.

Research suggests the public can tell fake from real in only about half of cases.

The British Medical Journal investigation warned that viewers are at risk of being suckered into buying pointless or potentially harmful products.

Dr Hilary Jones, a regular on Lorraine and Good Morning Britain, said he hires an expert to trawl the internet for fake videos of him and have them taken down.

He said: “There’s been a significant increase in this kind of activity.

“Even if you take them down, they just pop up the next day under a different name.”

Deepfakes are videos created using AI to make it look like someone has said or done something they have not.

Computer programmes can use old sound and video clips to recreate someone’s voice or appearance and make them do new things.

‘Innocent doctors’ embroiled in nonsense

Retired doctor John Cormack, who conducted the research, said use of the fake videos is on the rise.

He said: “Doctors who are perfectly innocent get embroiled in ideas such as big pharma and the health services withholding the cure for diabetes – and if you take these expensive pills for a short period of time it can cure you.

“The bottom line is, it’s much cheaper to spend your cash on making videos than it is on doing research and coming up with new products and getting them to market in the conventional way.”

He said he found clips of well-known doctors promoting hemp gummies and bogus products that claim to fix high blood pressure and diabetes.

A spokesperson for Meta, the owner of Facebook and Instagram, said: “We will be investigating the examples highlighted by the British Medical Journal.

“We don’t permit content that intentionally deceives or seeks to defraud others, and we’re constantly working to improve detection and enforcement.

“We encourage anyone who sees content that might violate our policies to report it so we can investigate and take action.”

WHAT ARE DEEPFAKES?

ARTIFICIAL intelligence is evolving at lightspeed and one of the most concerning features is its ability to make “deepfakes”, which show people doing and saying things that they never did in real life.

Here’s what you need to know…

- Deepfakes use artificial intelligence and machine learning to produce face-swapped videos with barely any effort

- They can be used to create realistic videos that make celebrities appear as though they’re saying something they didn’t

- Deepfakes have also been used by sickos to make fake porn videos that feature the faces of celebrities or ex-lovers

- To create the videos, users first track down an XXX clip featuring a porn star who looks like an actress

- They then feed an app with hundreds – and sometimes thousands – of photos of the victim’s face

- A machine learning algorithm swaps out the faces frame-by-frame until it spits out a realistic, but fake, video

- To help other users create these videos, pervs upload “facesets”, which are huge computer folders filled with images of a celebrity’s face that can be easily fed through the “deepfakes” app

- Simon Miles, of intellectual property specialists Edwin Coe, told The Sun that the fake sex tapes could be considered an “unlawful intrusion” into the privacy of a celeb

- He added that celebrities could request that the content be taken down, but warned: “The difficulty is that damage has already been done.”
