Apple says its privacy-focused system will first attempt to fulfill AI tasks locally on the device itself. If any data is exchanged with cloud services, it will be encrypted and then deleted afterward. The company also says the process, which it calls Private Cloud Compute, will be subject to verification by independent security researchers.

The pitch offers an implicit contrast with the likes of Alphabet, Amazon, or Meta, which collect and store enormous amounts of personal data. Apple says any personal data passed on to the cloud will be used only for the AI task at hand and will not be retained or accessible to the company, even for debugging or quality control, after the model completes the request.

Simply put, Apple is saying people can trust it to analyze incredibly sensitive data (photos, messages, and emails that contain intimate details of our lives) and deliver automated services based on what it finds there, without actually storing the data online or making any of it vulnerable.

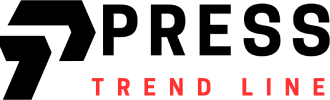

It showed a few examples of how this will work in upcoming versions of iOS. Instead of scrolling through your messages for that podcast your friend sent you, for example, you could simply ask Siri to find and play it for you. Craig Federighi, Apple’s senior vice president of software engineering, walked through another scenario: an email comes in pushing back a work meeting, but his daughter is appearing in a play that evening. His phone can now find the PDF with information about the performance, predict the local traffic, and let him know if he’ll make it on time. These capabilities will extend beyond apps made by Apple, allowing developers to tap into Apple’s AI too.

Because the company profits more from hardware and services than from ads, Apple has less incentive than some other companies to collect personal online data, allowing it to position the iPhone as the most private device. Even so, Apple has previously found itself in the crosshairs of privacy advocates. Security flaws led to leaks of explicit photos from iCloud in 2014. In 2019, contractors were found to be listening to intimate Siri recordings for quality control. Disputes about how Apple handles data requests from law enforcement are ongoing.

The first line of defense against privacy breaches, according to Apple, is to avoid cloud computing for AI tasks whenever possible. “The cornerstone of the personal intelligence system is on-device processing,” Federighi says, meaning that many of the AI models will run on iPhones and Macs rather than in the cloud. “It’s aware of your personal data without collecting your personal data.”

That presents some technical obstacles. Two years into the AI boom, pinging models for even simple tasks still requires enormous amounts of computing power. Accomplishing that with the chips used in phones and laptops is difficult, which is why only the smallest of Google’s AI models can be run on the company’s phones, and everything else is done via the cloud. Apple says its ability to handle AI computations on-device is due to years of research into chip design, leading to the M1 chips it began rolling out in 2020.

Yet even Apple’s most advanced chips can’t handle the full spectrum of tasks the company promises to carry out with AI. If you ask Siri to do something complicated, it may need to pass that request, along with your data, to models that are available only on Apple’s servers. This step, security experts say, introduces a host of vulnerabilities that may expose your information to outside bad actors, or at least to Apple itself.